vLLM

Getting Started with Pruna & vLLM

In this guide, you will learn how to use vLLM to serve your pruna models. vLLM is a high-performance and easy-to-use serving engine for LLMs.

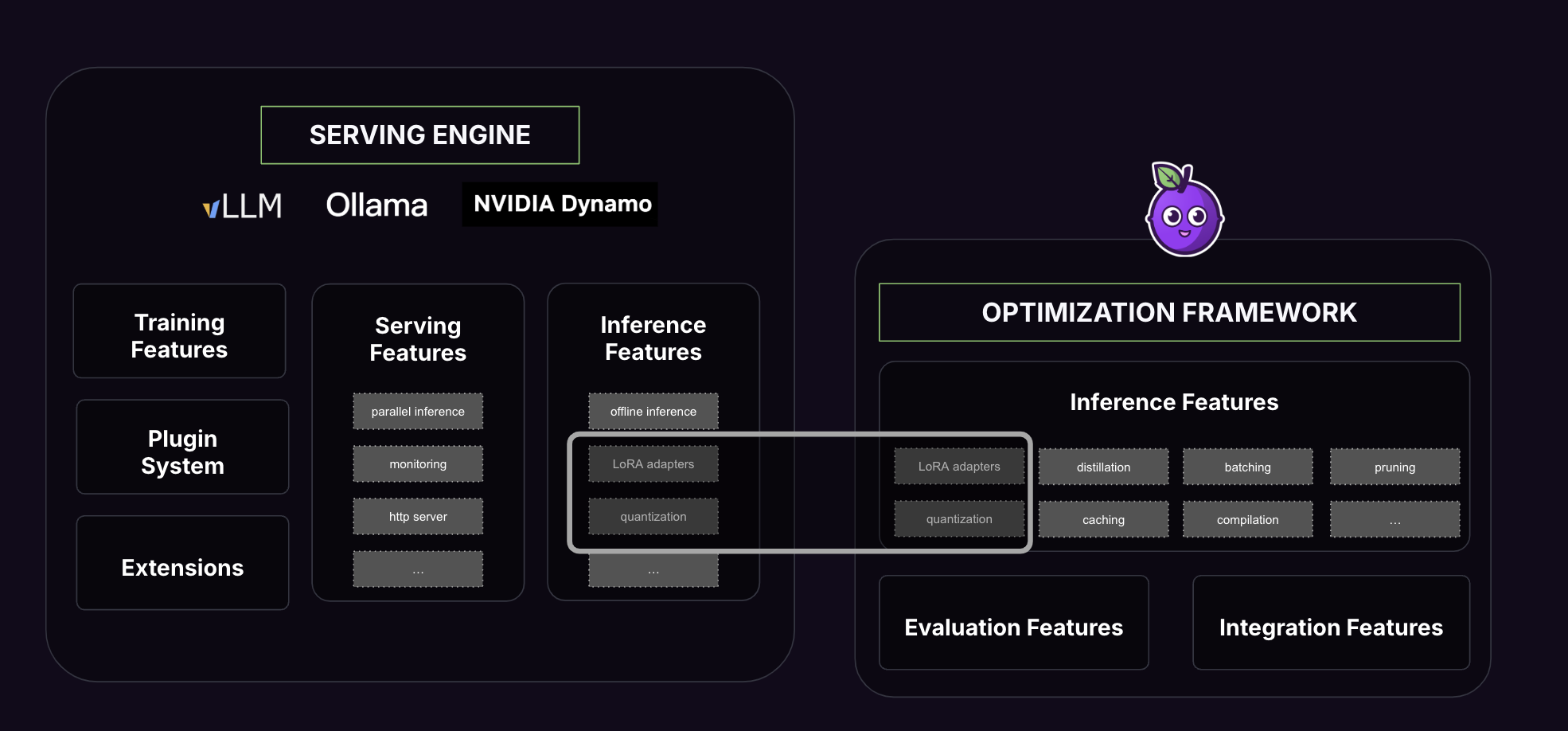

While vLLM focuses on optimizing the serving environment (distributed inference, throughput, infra-level tweaks), Pruna specializes in model-level optimizations. You can use Pruna to prepare models with supported quantization methods for vLLM, but Pruna’s real edge is in combining multiple optimization techniques—going beyond what serving platforms alone can offer. For the most flexibility and performance, Pruna should be used alongside adaptable serving engines or as the optimization layer in your stack.

Step 1. Installation

To use vLLM, you’ll need to make sure you can install pruna and its dependencies. You can take a look at the installation guide or Dockerfile for more information.

vLLM can simpy be installed with the following command:

pip install vllm

Now, let’s see how to use vLLM to serve your pruna models.

Step 2. Understand the Basics of vLLM

vLLM is a high-performance and easy-to-use serving engine for LLMs. For now, vLLM can only take as input a model path. Hence, you have to give it a path to your huggingface model, or a local path to a your pruna optimized model. To get started, you can take a look at the vLLM documentation.

Step 3. Optimize your model

To optimize your model, you can use the following code:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(model_id)

from pruna import SmashConfig, smash

smash_config = SmashConfig()

smash_config['quantizer'] = 'llm_int8' # or gptq

smash_config['llm_int8_weight_bits'] = 4

model = smash(

model=model,

smash_config=smash_config,

)

model.save_pretrained("path/to/pruna/model")

Step 4. Serve your pruna model

You can then simply start the server with the following command:

from vllm import LLM, SamplingParams

llm = LLM(model="path/to/pruna/model")

sampling_params = SamplingParams(temperature=0.8, top_p=0.95, min_tokens=100, max_tokens=100)

prompt = "Hello, how are you?"

outputs = llm.generate(prompt, sampling_params)

for output in outputs:

prompt = output.prompt

generated_text = output.outputs[0].text

print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}") # noqa: T201

vllm serve path/to/pruna/model

Note

Don’t forget to add the model files and the config.json in your container if your are using Docker (see this github issue for more details).

This is a simple example of how to serve an pruna model with vLLM but there are many more configurations options, like batching or prefill chunking. In the vLLM documentation you can find more examples.

Ending Notes

In this guide, we have seen how to use vLLM to serve your pruna models. vLLM is a powerful tool that can help you deploy your models quickly and easily. You can deploy even faster LLMs with pruna_pro! Have a look at the pruna_pro guide to learn more about pruna_pro, and the dedicated tutorial page to learn how to deploy your models with pruna_pro. If you have any questions or feedback, please don’t hesitate to contact us.